It was not so long ago that a publication’s reputation was its livelihood. Bastions of journalism like the BBC, the Wall Street Journal and the New York Times thrived on hard-earned trust, built through constant dedication to the facts. But as journalism has grown faster, so too has readers’ appetite for instant news. The instantaneous connection between writer and reader has led to the rise of the viral headline: a bite-sized caricature of actual events, meant to be read and shared as quickly as possible. Even for the dedicated newsreader, impartiality is quickly becoming a thing of the past. And the vast increase in information over the last five years has given rise to more and more statistics that may be true on paper but only tenuously hold up in practice.

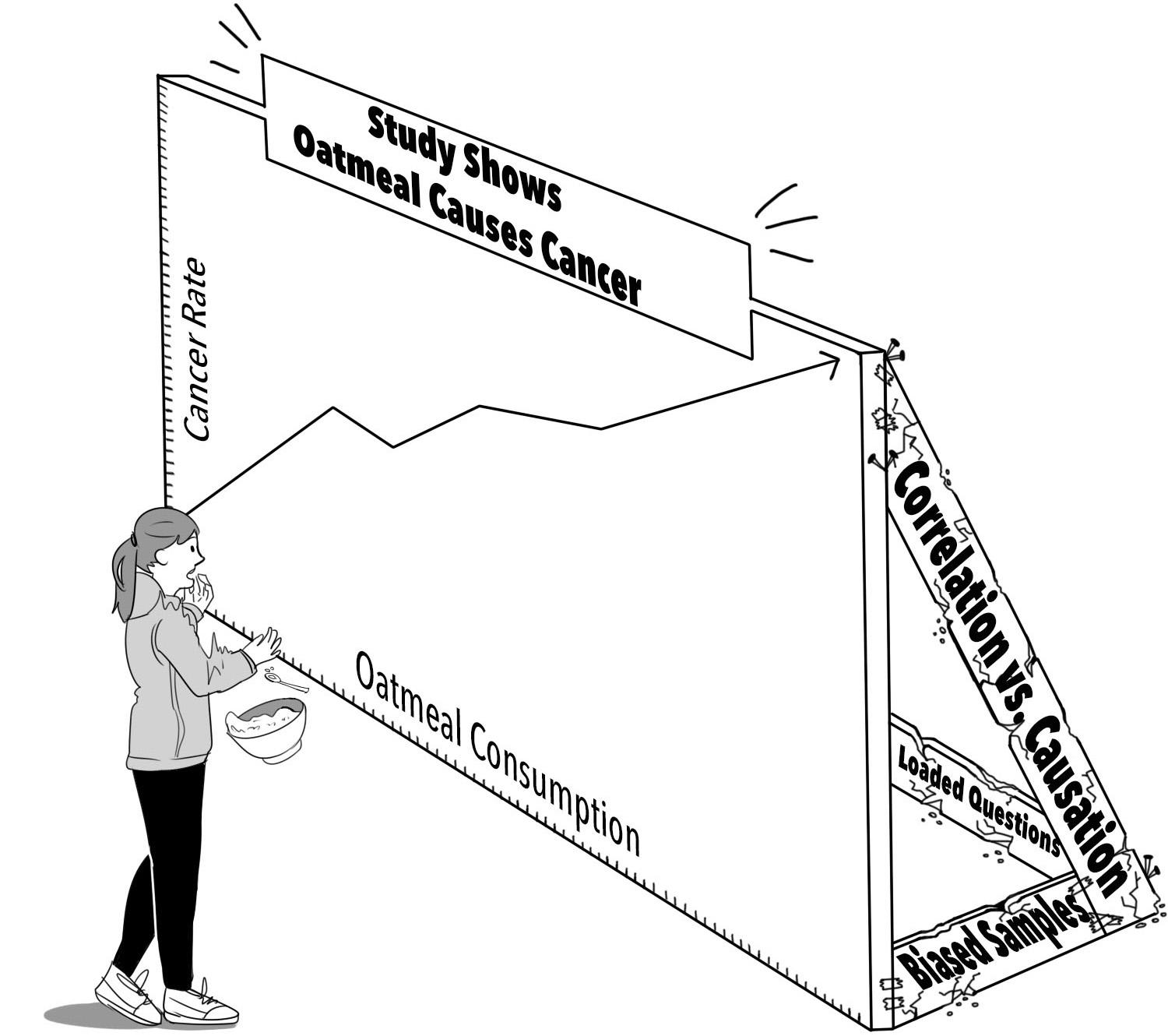

A large proportion of these readers are teens who, through social media platforms like Facebook and Twitter, are exposed to hundreds of statistics a day from sources like Buzzfeed and The Wall Street Journal. With this mass consumption of media comes a high risk of absorbing false statistics without even knowing it.

“If you’ve never had any sort of background on what data analysis looks like, it’s hard to expect to be able to interpret things meaningfully and see the flaws that are present,” says Scott Friedland, a Palo Alto High School AP Statistics teacher.

This statistical illiteracy poses many problems for teens and adults alike. According to Oregon Health and Science University Biostatistics Professor Jodi Lapidus, it is important to be able to interpret statistics before making decisions based on them, especially major ones like voting and lifestyle changes.

“I tell my students: the computer can compute statistics for you, but it doesn’t make the decisions about how to interpret the results,” Lapidus says. “But synthesizing information you hear in the media and applying it to your own life — that is your job.”

A 2015 study by John Bohannon, a science writer based out of Harvard University, revealed the pitfalls of the media misquoting statistics and found that many academic journal publishers were not even trying to be truthful. Bohannon conducted an experiment with 16 volunteers to test the effects of a diet with and without chocolate, and found a statistically significant weight loss in the chocolate group. But the experiment was deliberately flawed so that it would produce a result. Because the sample size was so small, Bohannon could rest assured before the experiment started that he would find some sort of trend, and the rest was as easy as sending it to the presses.

“We needed to get our study published pronto, but since it was such bad science, we needed to skip peer review altogether,” Bohannon says. “Conveniently, there are lists of fake journal publishers. Since time was tight, I simultaneously submitted our paper ‘Chocolate with high cocoa content as a weight-loss accelerator’ to 20 journals. Then we crossed our fingers and waited.”

And sure enough, getting the results published was as easy as paying a fee. Through a simple exchange of money, fake science became real, and the media ate it up, presenting it to the public as fact.
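
The sample-size point can be made concrete with a short simulation. The sketch below is not Bohannon’s actual analysis; it assumes two groups of eight people (16 volunteers in total), no real difference between the groups, and a hypothetical number of measured outcomes per study, since the number of outcomes is not given here. Each outcome is checked with a standard two-sample t-test at the 5 percent level. The names `PER_GROUP`, `false_alarm_rate` and the others are made up for illustration.

```python
import math
import random

PER_GROUP = 8        # assumed: 16 volunteers split into two equal groups
T_CRITICAL = 2.145   # two-sided 5% cutoff for a t-test with 14 degrees of freedom


def mean_and_variance(values):
    """Return the sample mean and the sample variance (n - 1 denominator)."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, variance


def looks_significant(group_a, group_b):
    """Pooled two-sample t-test for two equal-sized groups at the 5% level."""
    mean_a, var_a = mean_and_variance(group_a)
    mean_b, var_b = mean_and_variance(group_b)
    pooled_variance = (var_a + var_b) / 2
    t = (mean_a - mean_b) / math.sqrt(pooled_variance * 2 / len(group_a))
    return abs(t) > T_CRITICAL


def study_finds_something(rng, outcomes):
    """Simulate one study in which the diet has no effect on any outcome."""
    for _ in range(outcomes):
        # Both groups are drawn from the same distribution: pure noise.
        group_a = [rng.gauss(0, 1) for _ in range(PER_GROUP)]
        group_b = [rng.gauss(0, 1) for _ in range(PER_GROUP)]
        if looks_significant(group_a, group_b):
            return True
    return False


def false_alarm_rate(outcomes, trials=2000, seed=1):
    """Fraction of no-effect studies that still report a 'significant' result."""
    rng = random.Random(seed)
    hits = sum(study_finds_something(rng, outcomes) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for outcomes in (1, 5, 18):  # 18 is a hypothetical number of outcomes
        print(outcomes, "outcomes measured:", false_alarm_rate(outcomes))
```

With a single outcome, about 5 percent of these no-effect studies should still look significant. With 18 independent outcomes the expected share is 1 − 0.95^18, or roughly 60 percent, which is why a small study that measures enough things can almost count on finding a headline.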

But the most shocking part here is not so much the ease with which Bohannon was able to publish his results; paying for publicity is not exactly a new concept. More surprising is the complete lack of responsibility demonstrated by these publications after they reported the faulty information, despite the exposé Bohannon published a few weeks after the initial wave of coverage.

“Try to find a single retraction or apology from any of the magazines or newspapers that covered my chocolate study credulously — I only know of one!” Bohannon says. “If bad journalism were named and shamed more routinely, maybe that would help.”

This problem is only compounded by the innumerable pathways that the Internet has opened up for false news to spread. The ever-present “share” button can make any reader a vector for the transmission of misinformation.

Statistical illiteracy is present on both sides of the media: reporters have realized that a fake story pulls in just as many views as a real one, and readers are not equipped with the tools to tell the difference.

Because it is so easy to share media on the Internet, a piece of false information can spread exponentially through social media, misleading thousands around the world before anyone can rebut it with evidence. This can lead to a situation where people believe a statistic because it has been so widely shared and, therefore, continue to share it. This fallacy is known as argumentum ad populum, or “appeal to the people.”

“The problem today is how quickly things can get distributed,” says Charles Du Mond, Vice President of Biometrics at Relypsa Inc., a Redwood City-based biopharmaceutical company. “If you post something that is wrong, it gets immediately spread to people on your friends list.”

Fortunately, there are a few steps one can take to avoid being misled by statistics. The first, according to Du Mond, is to initially doubt every statistic one comes across and let the research prove itself to be true beyond reasonable doubt.

“I think it is important to be skeptical and engage in a discussion with your friends or the person who is quoting it whether it [the statistic] is meaningful or not,” Du Mond says.

By taking a moment to consider what a statistic is really saying, a reader can get past the initial shock of the number and think about it rationally, even when the statistic is designed to provoke an emotional response.

“A lot of people will try to quote a statistic and to try to engage you emotionally, and there are plenty of things to be outraged about,” Du Mond says. “But you have resources like Google to check them. So not checking before you retweet or repost is just irresponsible.”

Tracing the origin of a statistic is important because it can help a reader detect possible sources of bias. When doing research, a reader should look at who produced the data and how it was collected.

“Advocacy organizations, such as PETA or NARAL or the NRA, have an interest in presenting information that supports their viewpoints,” says Jocelyn Dong, Editor-in-Chief of the Palo Alto Weekly. “Statistics promoted by those organizations might not have been derived from an unbiased methodology.”

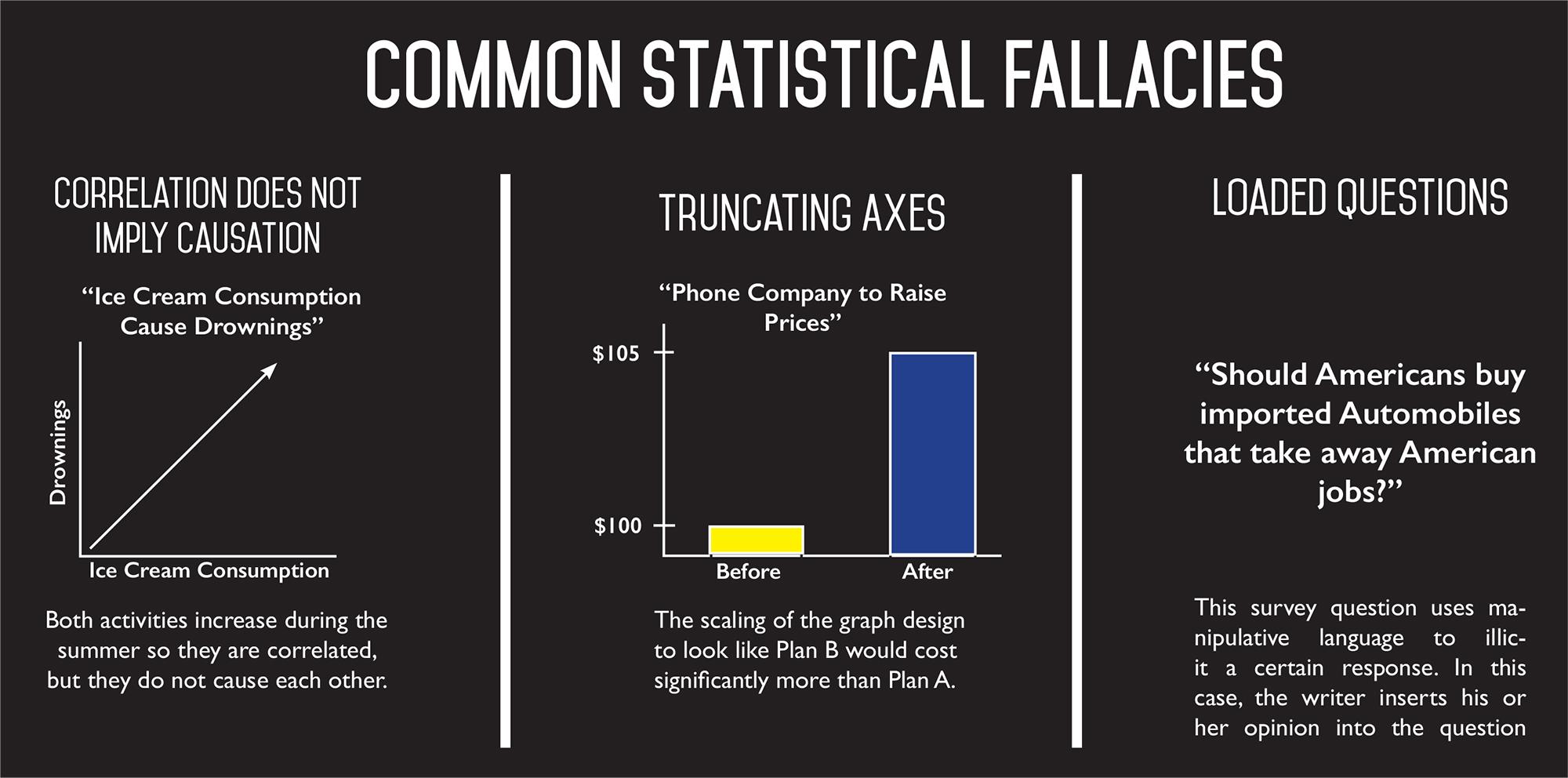

In many ways, the failure of both the public and journalists to fact-check, whether from inability or laziness, has perpetuated statistical fallacies in the media. In an age when technology puts large amounts of accurate information and data at our fingertips, we have no excuse for this ignorance.

“Take an extra 10 minutes and plug it into your search engine and look and see if there is really truth,” Du Mond says. “Everything that has ever been done is in your reach in 10 minutes or less. You can use data to your advantage.”